On January 27, DeepSeek released OCR 2 as an open-source model. After analyzing their technical report, I believe this represents a systematic shift in how AI understands visual data. Instead of simply increasing the number of parameters, DeepSeek focused on fundamental architectural changes to improve performance beyond the limits of traditional Vision-Language Models (VLMs).

DeepSeek OCR 2 is More Than Just Text Recognition

DeepSeek OCR 2 is a next-generation Vision-Language Model with 3 billion parameters. It differs significantly from traditional tools like Tesseract or basic visual models. OCR 2 prioritizes two specific goals:

- Correct Reading Order: It maintains the proper sequence for multi-column text, footnotes, and the relationship between headers and body text.

- Stable Layout Structure: It ensures that tables, lists, and mixed content are formatted into usable structures.

If you need to process PDF scans for database entry, clean data for RAG systems, or parse complex financial reports, OCR 2 provides a high level of accuracy and logical reconstruction.

Architectural Innovation: Why is DeepSeek OCR 2 So Efficient?

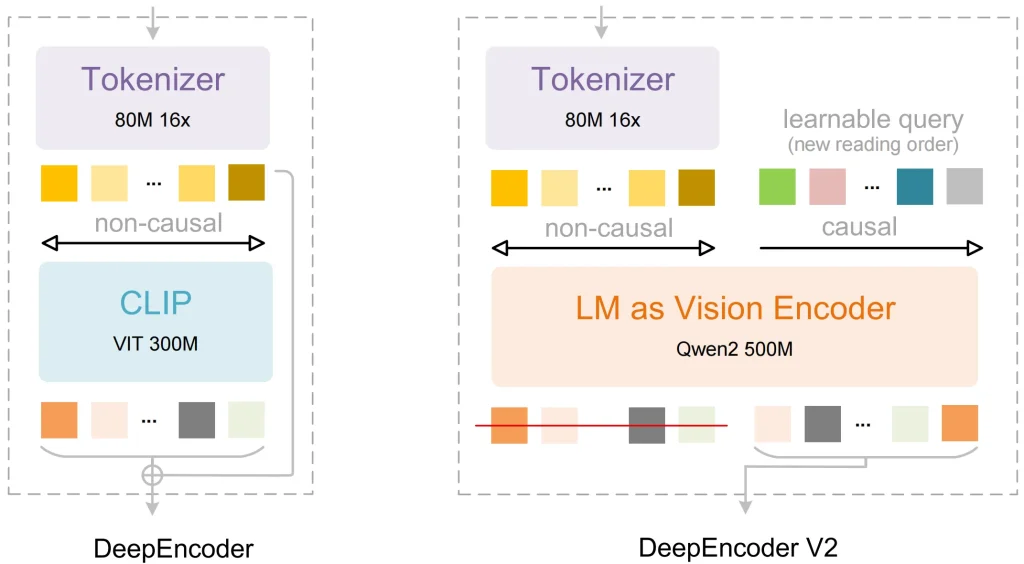

Replacing CLIP with a Language Model

Most older visual models use CLIP as the image processing component. CLIP was designed to match images with text labels. However, it lacks the ability to understand the logical relationship between different parts of a dense document.

The DeepSeek Solution: They used Qwen2-0.5B (an LLM-based architecture) as the core of the vision encoder.

The Benefit: Because the encoder is based on a language model, the visual tokens have a basic reasoning capability during the initial stage. The model can identify which pixels belong to a header and which belong to a table boundary, which leads to more accurate data processing.

DeepEncoder V2 and Visual Causal Flow

This is the most significant technical breakthrough in OCR 2. Many models process images in a fixed grid from top-left to bottom-right. This fixed order often causes errors when the model encounters complex tables or multi-column pages.

The DeepSeek Solution: They added Visual Causal Flow to the DeepEncoder V2 component:

- The model first collects the global information of the entire page.

- It uses learnable queries to reorder the visual tokens.

- It sends this logically organized sequence to the decoder to generate text.

This allows the model to gather information based on the actual meaning of the data. Since the information is organized by layout and semantics during the encoding stage, the final output is very stable.
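The three steps above can be illustrated with a toy example. This is a minimal sketch of query-based reordering, not DeepSeek's DeepEncoder V2 code: the vectors, the hand-set queries, and the function name `reorder_with_queries` are made up for illustration. In the real model the queries are learned, and the attention details are not covered here.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def reorder_with_queries(visual_tokens, queries):
    """Let each query attend over ALL visual tokens and emit one output vector.

    Outputs come out in query order, so the queries (not the raster scan)
    decide the sequence that is handed to the decoder.
    """
    dim = len(visual_tokens[0])
    outputs, attention = [], []
    for query in queries:
        # Global view: every query scores every token on the page.
        scores = [
            sum(q * t for q, t in zip(query, token)) / math.sqrt(dim)
            for token in visual_tokens
        ]
        weights = softmax(scores)
        # The weighted mix of tokens becomes the next element of the sequence.
        mixed = [
            sum(w * token[d] for w, token in zip(weights, visual_tokens))
            for d in range(dim)
        ]
        outputs.append(mixed)
        attention.append(weights)
    return outputs, attention


# Toy two-column page, stored in raster order (left to right, top to bottom).
labels = ["left-top", "right-top", "left-bottom", "right-bottom"]
visual_tokens = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
# Hand-set stand-ins for learned queries: left column first, then the right.
queries = [
    [10.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 10.0, 0.0],
    [0.0, 10.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 10.0],
]

_, attention = reorder_with_queries(visual_tokens, queries)
# For each query, find the token it attends to most strongly.
reading_order = [max(range(len(w)), key=w.__getitem__) for w in attention]
print([labels[i] for i in reading_order])
```

The printed order walks down the left column before moving to the right one, even though the tokens were stored in raster order. That is the effect the reordering step is meant to achieve.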

| Metric | Traditional OCR Models | DeepSeek OCR 2 |
| --- | --- | --- |
| Reading Order Error | High (struggles with columns) | Significantly lower (edit distance dropped to 0.057) |
| Token Compression | Low (thousands of tokens per page) | Very high (256 to 1120 tokens per page) |
| Stability/Accuracy | Prone to repetition or errors | 97% accuracy (at 10x compression) |
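To make the compression row concrete, here is a small arithmetic sketch. It assumes that "compression" means the number of text tokens on a page divided by the number of visual tokens the encoder emits. The page size of 2,500 text tokens is a made-up example, not a figure from the report.

```python
def compression_ratio(text_tokens: int, visual_tokens: int) -> float:
    """Text tokens represented per visual token (assumed definition)."""
    if visual_tokens <= 0:
        raise ValueError("visual_tokens must be positive")
    return text_tokens / visual_tokens


# Hypothetical page containing 2,500 text tokens.
page_text_tokens = 2500

# Visual-token budgets at the two ends of the range quoted in the table.
for budget in (256, 1120):
    ratio = compression_ratio(page_text_tokens, budget)
    print(f"{budget} visual tokens -> {ratio:.1f}x compression")
```

Under this assumption, the smallest budget puts the hypothetical page just under the 10x mark, while the largest budget compresses it only about twofold.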

Moving Visual Encoding Toward Reasoning

Experts describe OCR 2 as a “language-model–driven vision encoder.” This means the encoder focuses on spatial relationships and structural information rather than just extracting basic visual features.

The Results:

In the OmniDocBench v1.5 professional test, OCR 2 achieved a score of 91.09. This is a 3.73-point improvement over the previous version. Most of the progress occurred in the accuracy of reading orders and the handling of complex layouts.
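Reading-order accuracy is typically reported as an edit distance between the predicted block sequence and the reference sequence. The sketch below assumes a plain Levenshtein distance normalized by the longer sequence length. The benchmark's exact definition may differ, and the block names are hypothetical.

```python
def levenshtein(a, b):
    """Minimum number of insertions, deletions, and substitutions turning a into b."""
    previous = list(range(len(b) + 1))
    for i, item_a in enumerate(a, start=1):
        current = [i]
        for j, item_b in enumerate(b, start=1):
            cost = 0 if item_a == item_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution or match
            ))
        previous = current
    return previous[-1]


def normalized_edit_distance(predicted, reference):
    """Edit distance scaled to [0, 1]; 0.0 means the orders are identical."""
    longest = max(len(predicted), len(reference))
    return 0.0 if longest == 0 else levenshtein(predicted, reference) / longest


# Hypothetical two-column page: the reference reads column 1 fully, then column 2.
reference = ["title", "col1-p1", "col1-p2", "col2-p1", "col2-p2", "footnote"]
# A raster-order reader interleaves the two columns.
raster = ["title", "col1-p1", "col2-p1", "col1-p2", "col2-p2", "footnote"]

print(round(normalized_edit_distance(raster, reference), 3))
print(normalized_edit_distance(reference, reference))
```

A lower value means fewer blocks are out of place, which is why a drop in this number indicates better handling of columns and complex layouts.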

How to Use DeepSeek OCR 2: 3 Fast Deployment Methods

DeepSeek has released the model weights on Hugging Face. You can use these three methods to access the model for production or research:

Method 1: Fast Fine-Tuning via Unsloth (Recommended)

Unsloth is optimized for OCR 2 and significantly reduces memory usage.

    from unsloth import FastVisionModel
    import torch

    # Load the model
    model, tokenizer = FastVisionModel.from_pretrained(
        "unsloth/DeepSeek-OCR-2",
        load_in_4bit = True, # Use 4-bit quantization to save memory
    )

    # Prompt template
    prompt = "<image>\n<|grounding|>Please convert this document to Markdown and extract all tables."

Method 2: High-Performance Inference with vLLM

This is the best choice for organizations that need to handle many requests at once.

- Settings: DeepSeek recommends setting the `temperature` to 0.0 for the most consistent results.

- Language support: You can specify the target language in the prompt. It supports over 100 languages.
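The settings above might be wired into vLLM's offline batch API roughly as follows. This is a sketch under stated assumptions rather than DeepSeek's reference script: the helper names `build_request` and `run_batch`, the wording of the language hint, and the `max_tokens` value are all hypothetical, and the model may need extra engine options depending on your vLLM version.

```python
PROMPT = "<image>\n<|grounding|>Please convert this document to Markdown and extract all tables."


def build_request(image, language=None):
    """Build one vLLM multimodal request; the language hint wording is hypothetical."""
    prompt = PROMPT
    if language:
        prompt += f" The document is written in {language}."
    return {"prompt": prompt, "multi_modal_data": {"image": image}}


def run_batch(image_paths, language=None):
    """Run OCR over several page images in one batched call."""
    # Imported lazily so build_request can be used without the GPU stack installed.
    from PIL import Image
    from vllm import LLM, SamplingParams

    llm = LLM(model="deepseek-ai/DeepSeek-OCR-2", trust_remote_code=True)
    # temperature=0.0 follows the recommendation above; max_tokens is an assumption.
    params = SamplingParams(temperature=0.0, max_tokens=8192)
    requests = [
        build_request(Image.open(path).convert("RGB"), language)
        for path in image_paths
    ]
    outputs = llm.generate(requests, params)
    return [out.outputs[0].text for out in outputs]
```

Calling `run_batch(["page1.png", "page2.png"], language="German")` with your own files would return one Markdown string per page. Submitting all pages in a single `generate` call lets vLLM schedule them together, which is where the throughput benefit comes from.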

Method 3: Standard Hugging Face Transformers

For maximum flexibility, use the standard library:

- Install the requirements: `pip install transformers einops addict easydict`

- Load the model: `AutoModel.from_pretrained("deepseek-ai/DeepSeek-OCR-2", trust_remote_code=True)`
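Put together, the loading step could look like the sketch below. The function name `load_ocr2`, the bfloat16 dtype, and the default device are assumptions for illustration. The inference call itself is defined by the repository's remote code, so check the model card for its exact name and arguments.

```python
MODEL_ID = "deepseek-ai/DeepSeek-OCR-2"


def load_ocr2(device="cuda"):
    """Load the tokenizer and model; trust_remote_code runs the repo's custom code."""
    # Lazy imports keep this module importable on machines without the ML stack.
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModel.from_pretrained(
        MODEL_ID,
        trust_remote_code=True,
        torch_dtype=torch.bfloat16,  # assumption: bf16 weights fit the target GPU
    )
    # eval() disables dropout and similar training-only behavior.
    return tokenizer, model.eval().to(device)
```

Because `trust_remote_code=True` executes Python code shipped with the model repository, it is worth reviewing that code, or pinning a specific revision, before running it in production.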

Tip: When you process tilted scans, straightening the image before OCR can help the model produce better results, even when the correction is as small as 0.5 degrees.

From my long-term observation of the AI industry, DeepSeek has consistently acted as a pioneer in optimizing core algorithms. I noted that their first OCR model in October 2025 already used token compression to improve efficiency.

OCR 2 is not just a performance update. It represents a fundamental change in how AI processes visual logic. By using a language model architecture for visual encoding, DeepSeek has increased the depth at which AI understands complex data. I believe these efforts demonstrate a high level of forward-thinking. This method of organizing information at the foundational level allows AI to read in a way that is more similar to human logic, and it provides a new standard for accurate data extraction in the future.