I evaluate GLM-5 primarily as an engineering model, not as a general chat model that only needs to “sound right.” My approach is straightforward. First, I use widely referenced public benchmarks to confirm where GLM-5 sits in the top tier. Then I validate those signals with a repeatable workflow that checks whether GLM-5 is genuinely more stable and practical on real engineering tasks. Based on that process, I conclude that GLM-5’s progress is not only about scale. It also advances long-context efficiency, agent training, and engineering-grade output stability at the same time. That combination helps explain why it performs close to leading closed models on both composite leaderboards and real-world agentic evaluations.

I Use Two Metrics to Establish GLM-5’s Position

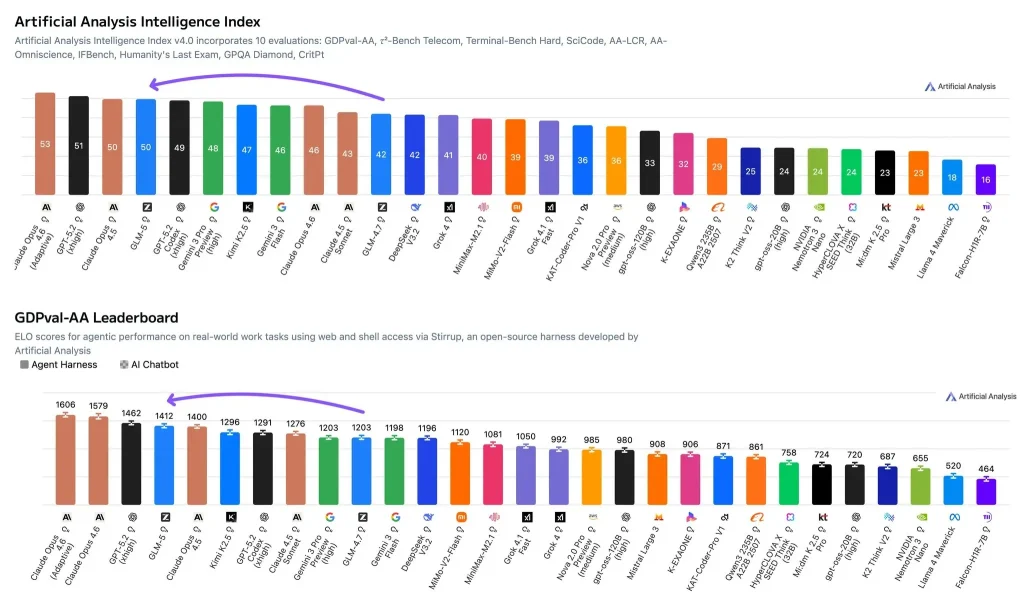

To avoid relying only on subjective impressions, I anchor my assessment of GLM-5 to two complementary Artificial Analysis evaluation tracks:

- Artificial Analysis Intelligence Index (composite capability score): GLM-5 scores 50, which places it in the top tier. Models scoring higher include Claude Opus 4.6 (Adaptive Reasoning) at 53 and GPT-5.2 (xhigh) at 51, while Claude Opus 4.5 sits in the same 50-point band. The index aggregates multiple evaluations into a single score that reflects overall strength across reasoning, coding, and related capabilities.

- GDPval-AA (real-world knowledge-work agentic evaluation): GLM-5 has an Elo rating of 1412. In plain terms, Elo is a relative strength score derived from head-to-head comparisons. A higher rating means a model tends to win more often against the same field on the same task set, and the gap between two ratings predicts their expected head-to-head win rate. GDPval-AA is designed to resemble real work (e.g., retrieving information, analyzing it, and producing deliverables), and it lets models operate in an agent harness with tool access.
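
The standard Elo model turns a rating gap into an expected head-to-head score (wins plus half of ties). The sketch below is minimal and assumes the conventional base-10, 400-point parameterization. The leaderboard's exact fitting procedure may differ. The opponent ratings are hypothetical offsets from 1412, not published scores.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo logistic model."""
    # Assumes the conventional base-10 / 400-point scale; only the gap matters.
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    # Hypothetical opponents rated 0, 50, 100, and 200 points below 1412.
    for gap in (0, 50, 100, 200):
        p = elo_expected_score(1412, 1412 - gap)
        print(f"gap {gap:>3}: expected score {p:.3f}")
```

Under this model, a 100-point gap corresponds to an expected score of about 0.64. A difference of a few dozen points is therefore a modest edge rather than a decisive one.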

Taken together, these two metrics point to a clear hypothesis: GLM-5’s advantage is unlikely to come from isolated “test-set tricks.” It is more likely to come from completion quality and stability on complex, multi-step tasks.

How I Test GLM-5: Three High-Frequency Engineering Workflows

My hands-on testing is closer to an engineering acceptance check than a “prompt showcase.” I focus less on whether the model can produce longer explanations and more on whether it can deliver correct, usable results under constraints. I primarily test three workflow types:

- Long-context software engineering tasks: I provide a long code segment plus documentation constraints, and require cross-file issue localization and a minimal-change fix proposal.

- Incremental code edits: I require changes limited to a specific function or module, keeping the rest of the structure intact, and I ask for a diff-style patch plus regression risks.

- Tool-centric task chains: I structure tasks as retrieve → synthesize → produce a deliverable, and I check whether the model can request missing inputs clearly and propose a reliable retry path when something fails.
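
Part of the acceptance check for the incremental-edit workflow can be automated. This minimal sketch assumes the model returns a git-style unified diff with `diff --git a/<path> b/<path>` headers and no spaces in paths. It lists every file the patch names and flags any that fall outside the scope you allowed. The function names are hypothetical.

```python
import re

# Assumes git-style patches: one "diff --git a/<old> b/<new>" header per file,
# with no spaces in paths.
_FILE_HEADER = re.compile(r"^diff --git a/(\S+) b/(\S+)$")


def files_touched(patch: str) -> set[str]:
    """Collect every path (old and new, so renames count) named in the file headers."""
    touched: set[str] = set()
    for line in patch.splitlines():
        match = _FILE_HEADER.match(line)
        if match:
            touched.update(match.groups())
    return touched


def out_of_scope(patch: str, allowed: set[str]) -> list[str]:
    """Return the sorted paths the patch touches outside `allowed`; empty means in scope."""
    return sorted(files_touched(patch) - allowed)


if __name__ == "__main__":
    sample = (
        "diff --git a/src/parser.py b/src/parser.py\n"
        "@@ -10 +10 @@\n"
        "-    return None\n"
        "+    return default\n"
        "diff --git a/src/cli.py b/src/cli.py\n"
    )
    print(out_of_scope(sample, allowed={"src/parser.py"}))
```

A check like this does not judge whether the fix is correct. It only catches patches that wander outside the requested scope before a human spends review time on them.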

I use these workflows because improvements on the Intelligence Index and GDPval-AA should show up most clearly in long chains, tool usage, and engineering deliverables rather than in short, single-turn prompts.

GLM-5’s Core Breakthroughs: A Structural Upgrade from Three Reinforcing Changes

DSA Sparse Attention Makes Long Context Economically Sustainable

In its public materials and paper, the GLM-5 team highlights the model's adoption of DSA (DeepSeek Sparse Attention). In simple terms: when inputs become very long, the model does not spend equal attention compute on every token. Instead, it allocates more computation to the tokens most likely to be important and relevant, reducing training and inference cost while aiming to preserve long-context quality.
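
The core idea is to pick a small set of relevant tokens cheaply, then run full attention only over that set. The single-query sketch below is simplified and is not DSA itself. DeepSeek's published design uses a learned lightweight indexer to select tokens. Here a dot product over the first `index_dims` dimensions stands in for that indexer. `index_dims`, `k`, and the function names are hypothetical.

```python
import math


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def topk_sparse_attention(query: list[float], keys: list[list[float]],
                          values: list[list[float]], k: int, index_dims: int) -> list[float]:
    """Single-query attention restricted to the k keys a cheap indexer ranks highest.

    Simplified illustration: the "indexer" is a truncated dot product, a hypothetical
    stand-in for a learned scorer.
    """
    # 1) Cheap relevance score for every key (few dimensions, so inexpensive).
    cheap = [dot(query[:index_dims], key[:index_dims]) for key in keys]
    # 2) Keep the indices of the k highest-scoring keys.
    selected = sorted(range(len(keys)), key=lambda i: cheap[i], reverse=True)[:k]
    # 3) Ordinary scaled dot-product softmax attention over the selected keys only.
    scale = 1.0 / math.sqrt(len(query))
    logits = [dot(query, keys[i]) * scale for i in selected]
    peak = max(logits)  # subtract the max before exp for numerical stability
    weights = [math.exp(x - peak) for x in logits]
    total = sum(weights)
    return [
        sum(w * values[i][d] for w, i in zip(weights, selected)) / total
        for d in range(len(values[0]))
    ]


if __name__ == "__main__":
    q = [1.0, 0.0]
    keys = [[5.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
    values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(topk_sparse_attention(q, keys, values, k=1, index_dims=1))
```

When `k` equals the number of keys, this reduces to ordinary dense attention. With `k=1` in the example, only the first key survives selection, so the output is exactly its value vector.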

In my tests, the practical implications are consistent with that design goal: as context grows, latency tends to increase more smoothly, and output coherence tends to remain more stable. This matters in engineering settings because codebase exploration, accumulating requirements, and long-horizon execution naturally expand context over time.
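
A back-of-the-envelope count shows why sparsity matters as context grows. The count below covers only query-key scores in the main attention for a sequence of length L. It treats the per-query token budget k as a hypothetical constant, not a published GLM-5 setting.

```latex
C_{\text{dense}} \propto L^{2}, \qquad
C_{\text{sparse}} \propto L\,k, \qquad
\frac{C_{\text{dense}}}{C_{\text{sparse}}} = \frac{L}{k},
\qquad \text{e.g. } \frac{131072}{2048} = 64 .
```

For a fixed k, main-attention cost grows linearly in L rather than quadratically. The lightweight selection step still scores every token, so the real saving is smaller than L/k and depends on how cheap that step is.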

Asynchronous RL Infrastructure (“slime”) Fits Long-Horizon Interaction Better

GLM-5 publicly describes an asynchronous reinforcement learning setup that decouples trajectory generation (rollout) from training to improve throughput and efficiency. A practical way to interpret this is that the model can learn more effectively from large volumes of interaction traces about how to complete tasks end-to-end, rather than only learning to produce answers that look plausible in isolation.
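
The decoupling can be pictured as a producer/consumer loop. Rollout workers keep generating trajectories into a buffer while the trainer pulls batches and updates the policy, so neither side idles waiting for the other. The sketch below is minimal and uses Python threads. `Trajectory`, `rollout_worker`, `trainer`, and the staleness bookkeeping are hypothetical and do not reflect slime's actual interfaces.

```python
import queue
import threading
from dataclasses import dataclass


class PolicyVersion:
    """Thread-safe counter standing in for the trainer's current weight version."""

    def __init__(self) -> None:
        self._value = 0
        self._lock = threading.Lock()

    def get(self) -> int:
        with self._lock:
            return self._value

    def bump(self) -> None:
        with self._lock:
            self._value += 1


@dataclass
class Trajectory:
    worker_id: int
    episode: int
    policy_version: int  # weight version that generated this rollout


def rollout_worker(worker_id: int, episodes: int, buffer: queue.Queue, version: PolicyVersion) -> None:
    """Generate trajectories and enqueue them without waiting for training steps."""
    for ep in range(episodes):
        # Placeholder for running a multi-step agent episode with tools.
        buffer.put(Trajectory(worker_id, ep, version.get()))


def trainer(buffer: queue.Queue, total: int, batch_size: int, version: PolicyVersion, log: list) -> None:
    """Pull batches as soon as they are available; each update bumps the version."""
    consumed = 0
    while consumed < total:
        batch = [buffer.get() for _ in range(min(batch_size, total - consumed))]
        consumed += len(batch)
        # Rollouts may come from older weights; record how stale this batch is.
        staleness = max(version.get() - t.policy_version for t in batch)
        log.append((len(batch), staleness))
        version.bump()  # placeholder for a gradient update


def run(num_workers: int = 3, episodes_per_worker: int = 4, batch_size: int = 5) -> list:
    buffer: queue.Queue = queue.Queue(maxsize=8)  # bounded: producers pause if training lags
    version = PolicyVersion()
    log: list = []
    total = num_workers * episodes_per_worker
    threads = [
        threading.Thread(target=rollout_worker, args=(w, episodes_per_worker, buffer, version))
        for w in range(num_workers)
    ]
    threads.append(threading.Thread(target=trainer, args=(buffer, total, batch_size, version, log)))
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log


if __name__ == "__main__":
    for size, stale in run():
        print(f"trained on {size} trajectories, max staleness {stale} versions")
```

The trade-off this exposes is staleness. Some trajectories are generated by slightly older weights, which an asynchronous RL method must tolerate or correct for.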

In hands-on workflows, I see this most clearly in failure handling: instead of looping on unproductive text, GLM-5 more often returns to constraints and proposes new executable steps, and it is more explicit about which inputs are missing.

Training Goals Shift Toward Agentic Engineering, Not Single-Point Skill Gains

GLM-5 explicitly positions itself as moving from “prompt-driven coding” toward agentic engineering. I interpret this as a training objective that extends beyond writing code or solving isolated reasoning problems: the model needs to plan, execute, and reflect over longer horizons, producing results that are usable in engineering workflows.

This framing helps explain why GLM-5 can be strong on GDPval-AA (knowledge-work agent tasks) while also scoring competitively on the composite Intelligence Index.

Why GLM-5 Still Ranks “Just Behind” Closed Flagships: The Gap Is Smaller, But Not Zero

GLM-5 Is Already in the Same Top-Tier Score Band

A score of 50 on the Intelligence Index suggests no major weaknesses across the aggregated evaluations, because a large deficit in any one of them would make a score at that level difficult to sustain. It sits in the same band as Claude Opus 4.5, and slightly below Claude Opus 4.6 (Adaptive Reasoning) and GPT-5.2 (xhigh).

GLM-5 Is Close to Flagships on Real Knowledge-Work Agentic Tasks

An Elo of 1412 on GDPval-AA implies strong relative win rates on tool-enabled knowledge-work tasks. For deployment decisions, this is often more predictive than static accuracy on a narrow benchmark, because many production scenarios involve retrieval, analysis, writing, and tool coordination.

Remaining Differences Show Up in Extreme Difficulty and Policy Maturity

Closed flagships often retain advantages in policy maturity: more consistent self-checking, more reliable refusal boundaries, and fewer edge-case errors. GLM-5 can approach their level, but for a subset of complex tasks it may still require clearer constraints or stronger system-level guardrails to deliver consistently.

Advantages I Confirm in Practice: GLM-5 Behaves More Like an Engineering Copilot Than a Chatbot

More Reliable Incremental Edits, Fewer Unnecessary Rewrites

When I require localized changes while preserving the surrounding structure, GLM-5 more often produces targeted replacements or diff-style edits instead of rewriting entire modules. This reduces review overhead and makes regression risks easier to manage.
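
You can make "targeted edit vs. module rewrite" measurable during review by computing the share of original lines a change replaces or deletes. The sketch below is minimal and uses Python's standard `difflib`. The 0.5 cutoff is an arbitrary, hypothetical threshold, and pure insertions are deliberately not counted.

```python
import difflib


def changed_line_ratio(before: str, after: str) -> float:
    """Fraction of the original lines that were replaced or deleted (0.0 = untouched)."""
    old = before.splitlines()
    new = after.splitlines()
    matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
    # Count original-side lines in "replace" and "delete" opcodes; inserts add none.
    touched = sum(i2 - i1 for tag, i1, i2, _, _ in matcher.get_opcodes()
                  if tag in ("replace", "delete"))
    return touched / max(len(old), 1)


def looks_like_rewrite(before: str, after: str, threshold: float = 0.5) -> bool:
    """Flag edits that replace more than `threshold` of the original (hypothetical cutoff)."""
    return changed_line_ratio(before, after) > threshold
```

Tracking this ratio across many edit requests gives a rough, reviewer-facing signal of how localized a model's changes tend to be.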

Better Constraint Consistency Over Longer Task Chains

When I split a task across multiple turns and enforce strict constraints from earlier steps, GLM-5 is more likely to keep those constraints consistent as the context grows, reducing contradictory assumptions.

More Executable Tool-Chain Outputs and Better Recovery After Failures

In retrieve → synthesize → deliver workflows, I focus on whether the model can produce executable steps and a clear “missing inputs” checklist. GLM-5 more often drives the workflow forward rather than staying at the explanation layer.

Limitations to Know Up Front: What Can Block Production Adoption

Deployment and Systems Costs Are Still High

GLM-5 is a flagship-scale MoE model. Even if only part of the model is activated per token, self-hosting still requires substantial work across memory planning, concurrency scheduling, KV cache strategy, quantization, and inference engine compatibility.
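
KV cache sizing is usually the first memory-planning question. For standard multi-head or grouped-query attention, the cache holds one key and one value vector per layer, KV head, and token. The sketch below is minimal and assumes that layout. The configuration values are hypothetical and are not GLM-5's published architecture. Designs that compress the cache (for example, multi-head latent attention) follow a different formula, so treat this as an order-of-magnitude planning aid.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int, seq_len: int,
                   batch: int = 1, bytes_per_elem: int = 2) -> int:
    """KV cache size for standard MHA/GQA: a K and a V vector per layer, KV head, and token."""
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_elem


if __name__ == "__main__":
    # Hypothetical configuration, NOT GLM-5's published architecture.
    size = kv_cache_bytes(layers=60, kv_heads=8, head_dim=128, seq_len=131072)
    print(f"{size / 2**30:.1f} GiB per 128K-token sequence at 16-bit precision")
```

With these hypothetical numbers, one 128K-token sequence needs 30 GiB of cache at 16-bit precision. Eight concurrent sequences need 240 GiB, and an 8-bit KV cache halves either figure. This multiplication is why concurrency scheduling, KV cache strategy, and quantization have to be planned together.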

It Will Not Automatically Win Every Specialized Vertical

The Intelligence Index and GDPval-AA lean toward general reasoning and knowledge-work tasks. If your domain is highly specialized—e.g., strict compliance workflows, niche formal math proofs, or extremely fine-grained style control—you should still run targeted A/B tests before committing.
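
Before committing, a paired comparison on your own task set is usually enough to tell a real difference from noise. The sketch below is minimal. It assumes each task is judged once as "A", "B", or "tie" (by a reviewer or a rubric), and it reports A's win rate plus an exact two-sided sign-test p-value. The function name and input format are hypothetical.

```python
from math import comb


def paired_ab_summary(outcomes: list[str]) -> tuple[float, float]:
    """Summarize per-task judgments ("A", "B", or "tie") from a paired A/B run.

    Returns (A's win rate among decided tasks, two-sided sign-test p-value).
    Ties are dropped, as in the standard sign test.
    """
    wins_a = outcomes.count("A")
    wins_b = outcomes.count("B")
    n = wins_a + wins_b
    if n == 0:
        return 0.5, 1.0  # nothing decided: no evidence either way
    k = min(wins_a, wins_b)
    # Exact binomial tail under H0: P(A wins a decided task) = 0.5.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2**n
    return wins_a / n, min(1.0, 2 * tail)
```

For example, 9 wins out of 10 decided tasks gives p ≈ 0.021. By contrast, 6 of 10 gives p ≈ 0.75, which is not evidence of a difference.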

A Strong Model Does Not Replace Strong Systems Engineering

In agentic deployments, the most common failure is not “the model cannot answer,” but “the execution chain is not controlled.” Tool permissions, security isolation, observability, retry logic, and evidence verification remain necessary to turn model capability into stable production performance.
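
Three of those controls (tool permissions, retry logic, and observability) fit in a few lines. The sketch below is minimal. It assumes tools are plain Python callables and that only `TimeoutError` and `ConnectionError` count as transient. `run_tool` and `ToolNotPermitted` are hypothetical names. Security isolation and evidence verification need infrastructure beyond this snippet.

```python
import time
from typing import Callable, Optional


class ToolNotPermitted(Exception):
    """Raised when the agent requests a tool outside its allowlist."""


def run_tool(name: str, tools: dict[str, Callable[..., object]], allowed: set[str],
             args: dict, max_attempts: int = 3, base_delay: float = 0.5,
             log: Optional[list] = None) -> object:
    """Run an allowlisted tool, retrying transient failures with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    if name not in allowed:
        raise ToolNotPermitted(name)  # checked before any side effect happens
    for attempt in range(1, max_attempts + 1):
        try:
            result = tools[name](**args)
        except (TimeoutError, ConnectionError) as exc:  # only these count as transient
            if log is not None:
                log.append((name, attempt, f"retryable: {exc!r}"))
            if attempt == max_attempts:
                raise
            time.sleep(base_delay * 2 ** (attempt - 1))
        else:
            if log is not None:
                log.append((name, attempt, "ok"))
            return result
    raise RuntimeError("unreachable")  # every iteration returns, retries, or raises
```

This sketch checks permissions before the first call and retries only an explicit set of transient errors. Together, these choices keep a misbehaving plan from turning into repeated side effects, and the log gives each attempt an observable trace.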

When I Would Prioritize GLM-5

If my goal is for a model to carry a meaningful part of an engineering workflow (not just produce one-off answers), GLM-5 is a top-tier candidate, especially for:

- Long-context engineering tasks: cross-file debugging, refactoring, complex issue localization

- Tool-centric workflows: retrieval, scripting, data synthesis, document deliverables

- Open-weights requirements: on-prem deployment, customization, and tighter cost/control boundaries

If your workload is dominated by short Q&A, is extremely cost- or QPS-sensitive, or operates under very strict compliance boundaries with no appetite for system-level guardrails, I would start with lighter models or closed flagships as the baseline and add GLM-5 only if it delivers a clear return.