Anthropic officially released its flagship model, Claude Opus 4.6, on February 5, 2026. As a significant iteration over Opus 4.5, this model introduces technical optimizations in logical reasoning depth, long-context handling, and autonomous agent workflows. From a professional perspective, I will analyze the technical evolution of Claude Opus 4.6 and its practical utility in addressing critical business pain points.

How Claude Opus 4.6 Solves Real-World Challenges

In my observation, the primary cost for AI users is often not the initial query, but the subsequent “clarification and rework” required due to imprecise results. Claude Opus 4.6 addresses these inefficiencies through several targeted improvements.

Reducing Iterative Refinement in Long-Document Analysis

For the average user, common friction points include:

- Organizing fragmented materials into structured reports or presentations.

- Conducting comparative research and drafting strategic proposals across multiple sources.

- Maintaining continuity in long-running projects (e.g., iteratively updating a version over a week).

Anthropic emphasizes that Claude Opus 4.6 excels at decomposing complex requests into actionable steps. Its improved performance in long-context retrieval directly combats “context rot”, the phenomenon where model adherence and consistency degrade as the conversation grows longer.

Eliminating High-Frequency “Context Switching”

Professional users often suffer from a “context switching” tax—constantly jumping between Excel, PowerPoint, and document editors. This fragmentation disrupts focus and complicates information management.

With the launch of Claude Opus 4.6 on Microsoft Foundry, the model now features deep integration with the Microsoft ecosystem. It can autonomously clean and format data and draft presentation structures inside those tools. This reduces manual data migration between applications and keeps the workflow coherent.

Enhancing Stability in Long-Cycle Engineering Tasks

In coding scenarios, the true pain points reside in multi-step engineering activities: requirement decomposition, scope control, cross-file consistency, and complex debugging.

The Claude Opus 4.6 upgrade focuses on careful planning and sustained agentic performance. It is designed to be more reliable within large-scale enterprise codebases, and is specifically better at catching its own logic errors during code review. Amazon Bedrock has highlighted that Claude Opus 4.6 is purpose-built for these long-cycle projects, requiring significantly less human supervision in autonomous agentic workflows.

The Technical Innovation of Claude Opus 4.6

I categorize the technical evolution of Claude Opus 4.6 into three pivotal innovations that shift the LLM paradigm from reactive response to proactive planning. These technical foundations explain why the model effectively resolves the aforementioned pain points.

Adaptive Thinking: Dynamic Reasoning for Efficiency

This is the most representative innovation of Claude Opus 4.6. Historically, models applied the same computational weight to simple translations as they did to complex proofs. The Adaptive Thinking mechanism allows the model to dynamically adjust its reasoning depth based on task complexity.

- Effort Parameter Control: The API offers four levels: Low, Medium, High (default), and Max.

- Performance Impact: In Low mode, the model prioritizes minimal Time to First Token (TTFT) for real-time interaction. In Max mode, it triggers a deep Chain-of-Thought (CoT) to solve high-stakes engineering problems. This prevents token waste on trivial tasks while ensuring precision for complex ones, a critical factor for enterprise cost management.
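As a rough illustration of how the effort levels might be used in practice, the sketch below routes a task to a level and builds a request payload as a plain dictionary. Nothing is sent over the network. The helper names `pick_effort` and `build_request` are hypothetical, and the payload layout (`thinking`, `output_config.effort`, lowercase level strings) is an assumption rather than a confirmed schema; only the four level names, the High default, and the `claude-opus-4-6` identifier come from this article.

```python
from typing import Any

# Effort levels named in the article; the lowercase spellings are an assumption.
EFFORT_LEVELS = ("low", "medium", "high", "max")
DEFAULT_EFFORT = "high"  # the article states that High is the default


def pick_effort(latency_sensitive: bool, high_stakes: bool) -> str:
    """Hypothetical routing rule mapping task traits to an effort level."""
    if high_stakes:
        return "max"  # spend more reasoning on critical engineering work
    if latency_sensitive:
        return "low"  # favour a fast first token for real-time interaction
    return DEFAULT_EFFORT


def build_request(prompt: str, effort: str = DEFAULT_EFFORT) -> dict[str, Any]:
    """Build a request payload as a dict; the field layout is assumed."""
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"unknown effort level: {effort!r}")
    return {
        "model": "claude-opus-4-6",
        "max_tokens": 1024,
        "thinking": {"type": "adaptive"},     # assumed field name
        "output_config": {"effort": effort},  # assumed field name
        "messages": [{"role": "user", "content": prompt}],
    }
```

A caller might write `build_request("Review this migration plan", pick_effort(latency_sensitive=False, high_stakes=True))` and pass the result to whichever HTTP client or SDK it uses, after checking the field names against the official API reference.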

Compaction API: Intelligent Long-Term Memory Management

To prevent token overflow in long-running threads, Anthropic introduced the Compaction API, a mechanism for managing long- and short-term conversational memory. When the token limit is approached, the system no longer mechanically truncates history. Instead, it summarizes earlier turns, compressing the history while preserving core instructions and decision-making context. This helps collaborative projects that run for weeks or even a month stay coherent.
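The general idea of summarizing instead of truncating can be shown with a toy example. This is not Anthropic's algorithm or the Compaction API itself: the word-count token estimate, the placeholder `summarize` function, and the `compact` helper are all assumptions made for illustration. A real system would call a model to produce the summary.

```python
def estimate_tokens(text: str) -> int:
    """Crude token estimate: one token per whitespace-separated word (assumption)."""
    return len(text.split())


def summarize(messages: list[dict]) -> str:
    """Placeholder summarizer: keeps the first 8 words of each message."""
    parts = [" ".join(m["content"].split()[:8]) for m in messages]
    return "Summary of earlier turns: " + " | ".join(parts)


def compact(history: list[dict], budget: int, keep_recent: int = 2) -> list[dict]:
    """Fold older messages into one summary message when over budget.

    Returns the history unchanged if it fits the budget or is too short to
    split. This is a single pass and does not guarantee the result fits.
    """
    total = sum(estimate_tokens(m["content"]) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history
    cut = len(history) - keep_recent
    old, recent = history[:cut], history[cut:]
    summary = {"role": "user", "content": summarize(old)}
    return [summary] + recent
```

The design choice worth noting is that the most recent turns are kept verbatim while older ones are condensed, so the latest instructions survive intact even when the earlier context is compressed.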

Data Sovereignty and Compliance Controls

To meet the rigid demands of highly regulated industries, Claude Opus 4.6 introduces the inference_geo parameter for granular infrastructure control. Users can force inference to stay within U.S. borders for a 1.1x pricing premium. This feature targets data-residency requirements of the kind that arise under regimes such as GDPR and HIPAA, lowering a legal barrier to large-scale enterprise deployment.
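The cost effect of the residency option is simple arithmetic, sketched below. The parameter name `inference_geo` and the 1.1x multiplier come from this article; the value `"us"`, the helper names, and the sample cost figure are assumptions for illustration only.

```python
US_ONLY_MULTIPLIER = 1.1  # pricing premium stated in the article


def with_us_residency(request: dict) -> dict:
    """Return a copy of a request dict with inference pinned to the U.S.

    The value "us" is an assumption; only the parameter name is from the article.
    """
    pinned = dict(request)
    pinned["inference_geo"] = "us"
    return pinned


def estimated_cost(base_cost: float, us_only: bool) -> float:
    """Apply the residency premium to a base cost expressed in any currency unit."""
    return base_cost * US_ONLY_MULTIPLIER if us_only else base_cost
```

For a hypothetical monthly spend of 100 units, `estimated_cost(100.0, us_only=True)` comes to about 110 units, so the premium is a flat 10% on top of whatever the workload would otherwise cost.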

Analyzing the Claude Opus 4.6 Performance Benchmarks: A New Industry Standard

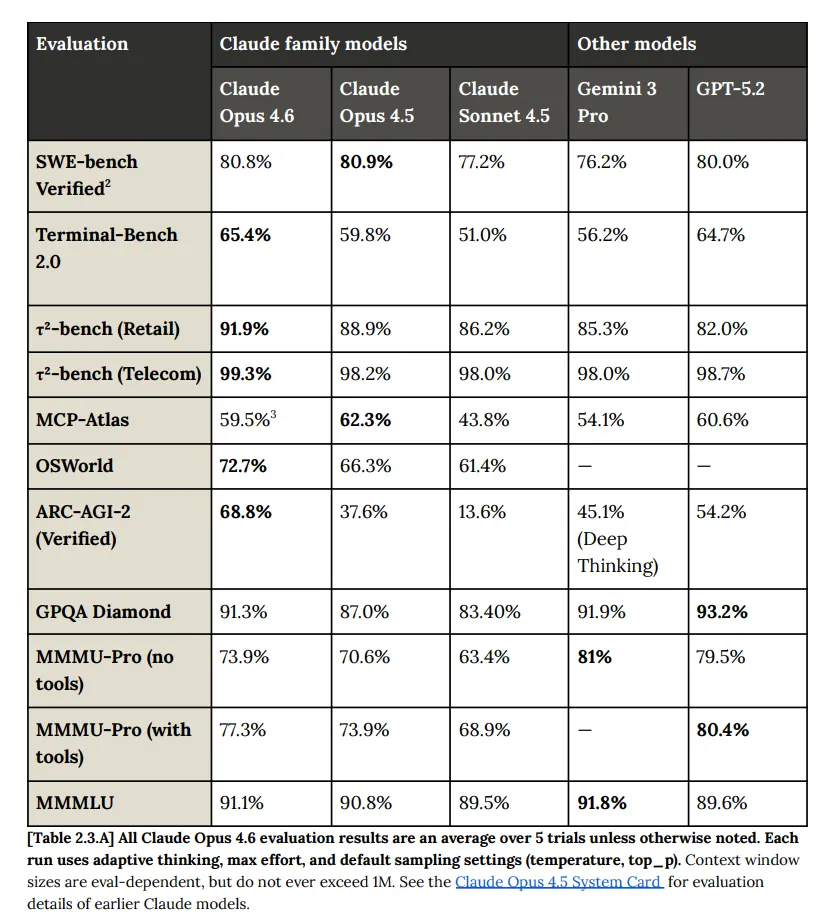

Before reviewing the data from the Anthropic System Card, it is important to define the core metrics used to evaluate Claude Opus 4.6:

- Elo Points: A rating system derived from competitive gaming used to measure a model’s win rate in blind human evaluations. Under the standard Elo formula, a 144-point lead corresponds to an expected win rate of roughly 70% against a competitor.

- ARC-AGI 2: A benchmark for spatial reasoning on novel tasks, serving as a key indicator of AGI (Artificial General Intelligence) potential.

- Terminal-Bench 2.0: Evaluates the model’s ability to operate like a human developer within a terminal environment (file manipulation, execution, and debugging).
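To make the Elo figure concrete, the snippet below converts a rating gap into an expected win rate. It assumes the standard Elo logistic formula with a 400-point scale; the benchmark's own rating method may differ in detail, and the function name `elo_expected_score` is chosen here for illustration.

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected score of the higher-rated side under the standard Elo formula.

    rating_gap is (higher rating - lower rating); the 400-point scale is the
    conventional choice and is assumed here.
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


# A 144-point lead, as discussed in the article.
print(f"{elo_expected_score(144):.3f}")  # prints 0.696
```

A gap of zero gives exactly 0.5, and each additional 400 points multiplies the odds of winning by ten, which is why a 144-point lead lands near 70% rather than far above it.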

According to official disclosures, Claude Opus 4.6 has set new industry records:

| Category | Benchmark | Score | Industry Standing |
| --- | --- | --- | --- |
| Economic Value Tasks | GDPval-AA | 1606 Elo | Leads GPT-5.2 by ~144 points; superior reliability in Finance/Legal. |
| Logical Generalization | ARC-AGI 2 | 68.8% | Nearly double the score of Opus 4.5 (37.6%). |
| Agentic Coding | Terminal-Bench 2.0 | 65.4% | Highest autonomous coding score in the industry. |
| Expert Knowledge | Humanity’s Last Exam | Top Score | Ranked #1 in cross-disciplinary expert-level reasoning. |

How to Access Claude Opus 4.6

Users can integrate this powerful model through several flexible channels:

Claude Official Channels: Available now for Claude Pro, Team, Enterprise, and the new Max tier users via the web interface.

iWeaver AI: Shortly after the launch, iWeaver AI integrated Claude Opus 4.6. The advantage of iWeaver over the standard web interface is the lower barrier to entry: users do not need to manage API environments or engineer complex prompts. It also allows for one-click switching between different flagship models based on specific task needs.

API Integration: Developers can call the model via the claude-opus-4-6 identifier. Note that the 1M Context Window is currently in Beta.

Enterprise Cloud Platforms:

- Amazon Bedrock: Supports global node distribution for high-concurrency needs.

- Microsoft Foundry on Azure: Now live in regions such as East US 2.

- Google Cloud Vertex AI: Supports the Adaptive Thinking mode synchronously.

The release of Claude Opus 4.6 represents Anthropic’s success in balancing reasoning precision with engineering scalability. For professional users handling extreme logic or massive datasets, this model currently offers the most robust solution on the market.