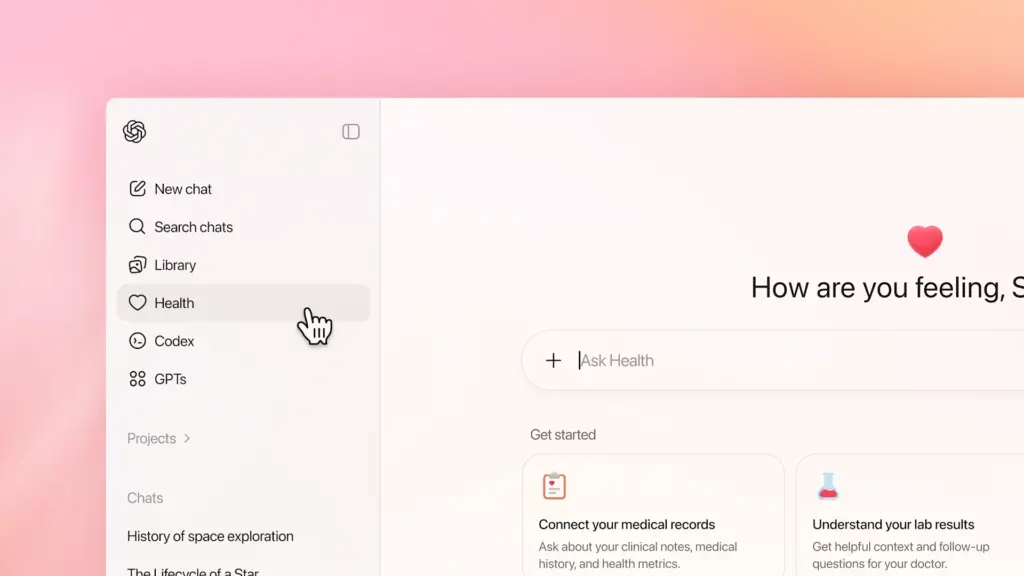

On January 7, 2026, OpenAI officially rolled out a highly anticipated expansion to its ecosystem: ChatGPT Health. This dedicated space within ChatGPT is designed to help users aggregate personal health data, interpret complex medical information, and prepare effectively for clinical consultations.

For industry observers, this launch represents more than a feature update; it marks a pivotal shift in AI's evolution from a “general-purpose assistant” to a “vertical domain expert.” This analysis breaks down ChatGPT Health’s core capabilities, its privacy protocols, and the implications for personal health management.

What is ChatGPT Health?

ChatGPT Health is a dedicated, encrypted environment within the ChatGPT interface, engineered specifically for health and wellness inquiries. Its primary objective is to help users organize, understand, and track complex health metrics.

Unlike standard ChatGPT, the Health experience has been tuned for medical rigor and terminology. Crucially, it supports direct integrations with third-party health apps (such as Apple Health, MyFitnessPal, and Oura) and with electronic health records (EHRs). This lets the AI provide personalized analysis based on real-world physiological data rather than generic medical advice.

Key Capabilities: What Can It Do?

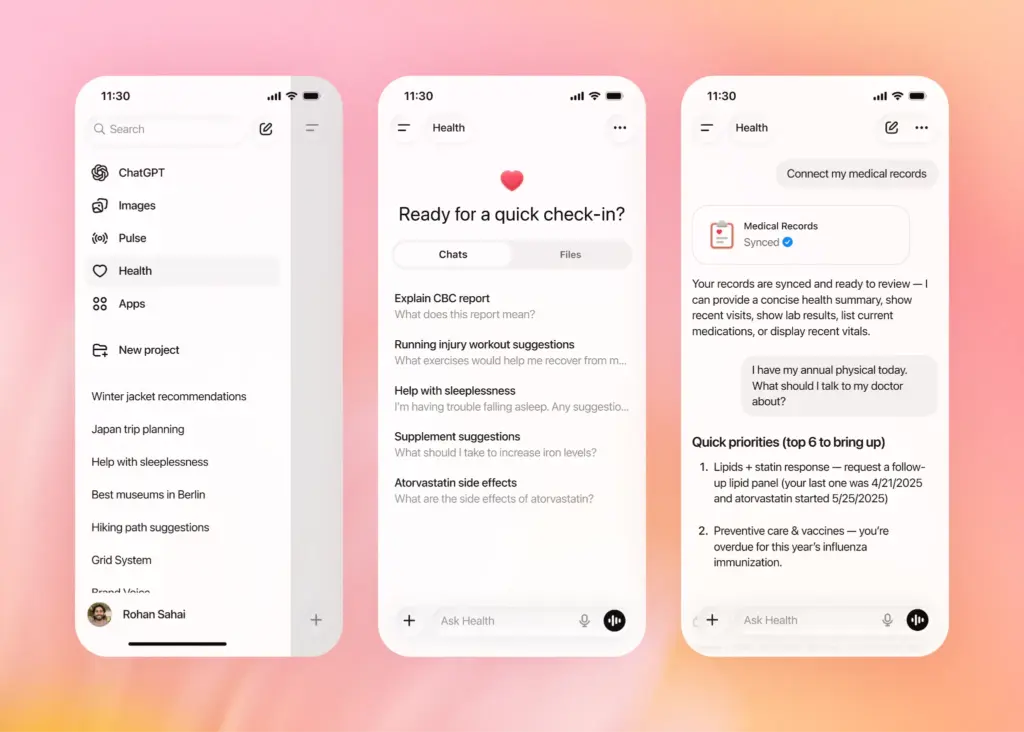

- Multi-Source Data Integration: By breaking down data silos, ChatGPT Health aggregates metrics like step count, sleep quality, and dietary logs. It cross-references this data to identify holistic health trends.

- Medical Document Analysis: Users can upload PDFs or images of lab reports and blood work. The AI extracts key indicators to explain results in plain English.

- Pre-Appointment Preparation: Based on reported symptoms and historical data, the system generates a “Doctor Discussion Guide,” helping patients prioritize questions to maximize limited face-to-face time with clinicians.

- Long-Term Trend Tracking: Beyond snapshot analysis, the AI monitors longitudinal physiological changes to flag potential risks early.

A Real-World Case Study: From Confusion to Precision

Millions of users worldwide already turn to AI with health questions. To test the efficacy of the new specialized model, I uploaded the results of my recent annual physical.

The Experience:

“I uploaded a blood work report containing dozens of biochemical markers. ChatGPT Health immediately parsed the data and filtered out the noise. It confirmed that key metrics, such as white blood cell count, were within the normal range. However, it flagged that my hemoglobin and ferritin levels were borderline low. Correlating this with the fatigue symptoms I had previously logged, it suggested a potential risk of iron-deficiency anemia.”

Clinical Value:

The AI did not stop at identifying the issue. It provided clinical context, suggested specific dietary adjustments, and outlined follow-up tests to discuss with a provider.

ChatGPT Health allowed a layperson to decipher a complex medical report. During the subsequent doctor’s visit, the dynamic changed from passive listening to active engagement. I was able to point to specific markers and ask targeted questions, extracting maximum value from the brief consultation.

The Controversy: AI Hallucinations and Privacy Concerns

Despite the utility, the integration of AI into healthcare raises valid concerns regarding hallucinations and data security.

- The Risk of AI Hallucinations: Early user feedback suggests that AI can occasionally over-interpret minor symptoms as signs of severe pathology. For users without medical training, this “over-diagnosis” can trigger unnecessary panic and health anxiety.

- Data Privacy & Insurance: A prevailing concern involves the potential monetization of sensitive data. Users fear that uploaded health records could be sold to insurance carriers, potentially leading to claim denials or premium hikes.

How to Use ChatGPT Health Safely: A Strategic Guide

To mitigate these risks, users must establish clear boundaries. Here are three strategies for safe engagement:

The “Co-Pilot” Mindset

Always view ChatGPT Health as a reference tool, not a diagnostic authority. It is a “co-pilot,” not the pilot. Any decisions regarding treatment plans or medication must be validated by a licensed medical professional.

- Note on Mental Health: While the AI can serve as a supportive listener, exercise extreme caution regarding medication advice for psychological conditions.

Trust but Verify: OpenAI’s Privacy Commitments

OpenAI has implemented strict data governance for this specific feature. Its Help Center answers common security questions, covering data security, third-party access, and how to stop using or delete the app if problems arise:

- No Model Training: OpenAI states that conversations, files, and memories within the Health workspace are excluded from training future models by default.

- Data Isolation: Health data is stored in a segregated, encrypted environment, separate from standard chat histories.

Human-in-the-Loop & Data Minimization

Even with strict policies in place, it is prudent to practice “data minimization”: share only the information the analysis actually needs.

- Redact Sensitive Info: Before uploading reports, obscure personally identifiable information (PII) such as Social Security numbers, home addresses, and insurance policy numbers.

- Critical Thinking: Treat “abnormal” alerts as cues for professional consultation, not definitive verdicts.

ChatGPT Health helps us interpret the signals our bodies send, filling a critical gap in today's healthcare infrastructure. This is especially true in the United States, where high costs, overbooked schedules, and complex insurance systems often delay care, and many people wait until a condition becomes critical before seeking help.

ChatGPT Health is reshaping this paradigm. It facilitates a shift from reactive treatment to proactive prevention. By offering personalized guidance on diet, exercise, and rehabilitation, it empowers users to secure professional-grade support the moment their body signals a need for attention.