The Great Dilemma of AI Assistants: A Genius Brain Locked in a Room

We are in a golden age of artificial intelligence (AI). Large Language Models (LLMs) are like supremely intelligent advisors, holding mastery over the vast ocean of human knowledge.

However, the way this genius advisor works has a fundamental limitation. Imagine that instead of collaborating with a colleague side-by-side, you are talking to a visitor through a thick pane of glass.

Yes, you can pick up individual files, like a PDF report, from your desk and manually “hand” them over, one at a time. But the AI cannot proactively walk into your office, see the real-time discussions in your team’s Slack channel, or independently explore the connections within your local codebase when you need it to. All it has are the static, isolated snapshots of information you have handed over.

This chasm between “one-off handoffs” and “real-time, seamless collaboration” is the core bottleneck preventing AI from evolving from a smart “Q&A tool” into a true “digital partner.” This disconnect manifests in daily use as frustrating “intelligence gaps”—flaws that arise not because the model isn’t smart enough, but because we haven’t yet provided it with a secure and continuous channel to the right context.

The “Forgetful” AI

Have you ever experienced a scenario where, in a long conversation with an AI assistant, you repeatedly emphasize a key design constraint, only for it to propose a solution minutes later that completely ignores it? This isn’t intentional; it reflects a known weakness in how models use long contexts. A 2023 study by Liu et al. describes this problem as “Lost in the Middle”: when language models process long inputs, their ability to use information follows a U-shaped curve. They do best with content at the beginning and the end, while information sandwiched in the middle is more easily overlooked or “forgotten.” As a result, a critical constraint you mentioned mid-conversation can fall into the model’s blind spot, making it appear to be a “forgetful” collaborator.

The “Confused” and “Distracted” AI

Imagine trying to discuss a complex project with a colleague who is constantly distracted by surrounding chatter, irrelevant noise, and outdated topics. This is precisely what happens when we feed an unfiltered, lengthy conversation history to an AI. Small talk, corrected mistakes, and off-topic discussions are all “noise” relative to the current task. This phenomenon is often called “Contextual Distraction.” The irrelevant information pulls the model away from your core question, ultimately producing an unfocused or off-target answer.

Even worse is “Contextual Drift.” As a conversation evolves, its topic and focus can change. If the AI cannot recognize this shift, it may cling to an outdated context, leading to a misinterpretation of your intent. For example, you may have already revised the initial requirements, but the AI continues to reason based on the old ones, resulting in flawed conclusions.

The impact of these seemingly minor technical flaws is profound. A partner that is forgetful, easily distracted, and frequently misunderstands instructions cannot be entrusted with significant responsibilities. Users will instinctively avoid letting such an AI handle complex, multi-step tasks like refactoring a critical code module or managing a project’s entire lifecycle. Therefore, solving the problem of context management is not just about improving model accuracy; it’s about building user trust. It is the cornerstone for building the next generation of autonomous AI agents (Agentic AI). Only when AI can reliably and accurately access and understand context can it truly step out of that locked room and become our trustworthy partner.

Enter MCP: The “USB-C Port” for AI Applications

Facing the dilemma of AI being trapped in contextual silos, the industry needed an elegant and unified solution. In November 2024, Anthropic introduced the Model Context Protocol (MCP). It is not another AI model or application, but an open-source, open standard designed to fundamentally change how AI connects with the external world.

The most intuitive way to understand MCP is through a widely cited metaphor: MCP is the “USB-C port” for AI applications. Think back to the world before USB-C: every device had its own proprietary, incompatible charger and data cable, and our drawers were stuffed with a mess of wires.

USB-C ended this chaos, connecting devices through a single, unified standard. MCP does the same for the world of AI. It aims to replace countless one-off “proprietary interfaces,” each custom-built for a specific tool or data source, with a universal protocol, so that AI applications can connect to any compatible tool in a “plug-and-play” manner.

This idea of standardization is not new; it has proven precedents. The Language Server Protocol (LSP) is a prominent example. LSP allowed features like smart suggestions and code completion for various programming languages to be integrated into any compatible code editor, saving developers from reinventing the wheel for every editor-language combination. MCP explicitly draws inspiration from LSP, aiming to replicate this achievement in the AI domain.

The release of MCP quickly drew a positive response from across the industry. Within months, AI giants like OpenAI and Google DeepMind, as well as key tool developers such as Zed and Sourcegraph, had adopted the standard. This broad support signals a major trend: the industry is moving from siloed efforts toward collaboratively building a more interconnected AI ecosystem.

Looking deeper, designing MCP as an open protocol rather than a proprietary product was a visionary strategic choice. It prevents any single company from monopolizing the “AI integration layer,” fostering a decentralized ecosystem of competition and innovation, and this openness was key to its acceptance by competitors like OpenAI and Google. AI integration is an enormous, fragmented problem, often called the “M×N integration problem”: connecting M AI applications to N tools with bespoke connectors requires up to M×N separate integrations, a workload no single company can absorb. A shared protocol shrinks this to roughly M+N, because each application and each tool only needs to implement MCP once.

By jointly developing an open standard, the giants are collaborating on the foundational, undifferentiated problem of connectivity. This lets them shift their competitive focus to higher-value areas: the quality of their core LLMs, the user experience of their host applications (like ChatGPT vs. Claude), and the capabilities of their first-party MCP servers (such as GitHub’s official MCP server). This “co-opetition” ultimately benefits the entire industry: tool makers aren’t locked into a single AI platform, and users are free to combine their favorite AI with their preferred tools. This openness is MCP’s most powerful and enduring strategic advantage.
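To make the “M×N” arithmetic concrete, here is a minimal Python sketch. With bespoke connectors, every application-tool pair needs its own integration (M×N); with a shared protocol, each application implements it once as a host and each tool once as a server (M+N). The counts used are invented for illustration, and the M+N model deliberately ignores per-deployment configuration work.

```python
def point_to_point(m_apps: int, n_tools: int) -> int:
    """Without a shared protocol, every app-tool pair needs its own connector: M x N."""
    return m_apps * n_tools


def shared_protocol(m_apps: int, n_tools: int) -> int:
    """With a shared protocol, each app and each tool implements it once: M + N."""
    return m_apps + n_tools


if __name__ == "__main__":
    m, n = 5, 20  # illustrative counts only, not real market figures
    print(point_to_point(m, n), shared_protocol(m, n))
```

The gap widens as either side grows: adding one more tool costs M new connectors in the point-to-point world, but only one new server with a shared protocol.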

How It Works: A Glimpse Inside

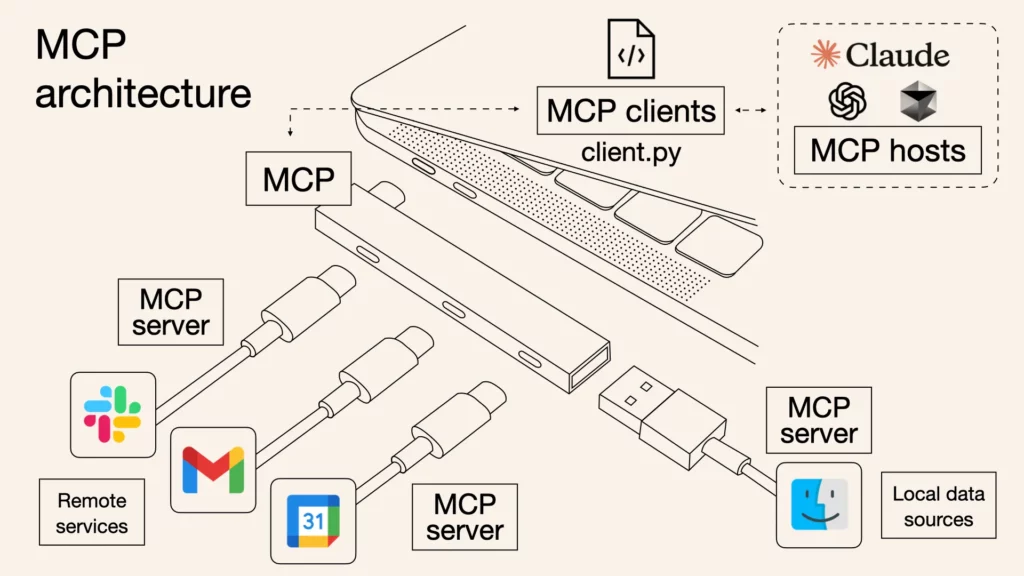

While MCP is backed by rigorous technical specifications, its core architecture can be simplified into three distinct roles that work together to form a secure and efficient communication system.

- MCP Host: This is the application you interact with directly, the “brain” and command center of the AI assistant. Examples include Claude Desktop, VS Code with Copilot, or any other AI-powered tool that supports MCP. The host manages everything, coordinates communication with the various tools, and, most critically, is responsible for obtaining your explicit consent before the AI takes actions on your behalf.

- MCP Client: Think of this as a “dedicated interpreter” that the host assigns to each specific tool. When the host wants to interact with both Slack and GitHub simultaneously, it creates two separate, isolated clients. Each client is only responsible for establishing a one-to-one connection with its designated server and uses the MCP protocol for “translation” and communication.

- MCP Server: This is a lightweight program that sits in front of a tool or data source, effectively giving it an MCP “socket.” It exposes the tool’s capabilities, such as “read a file,” “send a message,” or “query a database,” through a standardized MCP interface for the client to call.
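The three roles can be sketched in a few lines of Python. This is a hypothetical, in-process model: the `Server`, `Client`, and `Host` classes, the `read_file` and `send_message` capabilities, and the `approve` callback are illustrative names, not the official MCP SDK. Real MCP connects clients and servers over a transport (such as stdio or HTTP) using JSON-RPC messages.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Server:
    """Hypothetical stand-in for an MCP server: wraps one tool and exposes named capabilities."""
    name: str
    capabilities: Dict[str, Callable[..., str]]


class Client:
    """Hypothetical stand-in for an MCP client: a one-to-one link to a single server."""

    def __init__(self, server: Server) -> None:
        self._server = server  # the only server this client can reach

    def call(self, capability: str, **kwargs: str) -> str:
        return self._server.capabilities[capability](**kwargs)


class Host:
    """Hypothetical stand-in for an MCP host: one client per server, user approval before actions."""

    def __init__(self, approve: Callable[[str, str], bool]) -> None:
        self._clients: Dict[str, Client] = {}
        self._approve = approve  # in a real app this might be a confirmation dialog

    def connect(self, server: Server) -> None:
        # A new, separate client is created for every server the host talks to.
        self._clients[server.name] = Client(server)

    def run(self, server_name: str, capability: str, **kwargs: str) -> str:
        if not self._approve(server_name, capability):
            raise PermissionError(f"user declined {server_name}.{capability}")
        return self._clients[server_name].call(capability, **kwargs)


def demo() -> str:
    fs = Server("filesystem", {"read_file": lambda path: f"(contents of {path})"})
    slack = Server("slack", {"send_message": lambda channel, text: f"posted {len(text)} chars to {channel}"})
    host = Host(approve=lambda server, capability: True)  # auto-approve for the demo only
    host.connect(fs)
    host.connect(slack)
    report = host.run("filesystem", "read_file", path="report.md")
    return host.run("slack", "send_message", channel="#team", text=report)


if __name__ == "__main__":
    print(demo())
```

Note that the host never calls a server directly; it always goes through the client dedicated to that server, and every action first passes the user-approval check.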

The entire information flow is straightforward. A request from you (e.g., “Summarize the latest report in the project folder and send it to the team channel”) is first received by the host. The host, working with its language model, determines that this requires two tools: the file system and Slack. It retrieves the report from the file system server via the file system client, has the model produce the summary, and then sends that summary to the Slack server via the Slack client, which posts it to the designated channel. All of this communication uses a standardized message format called JSON-RPC 2.0, which can be understood simply as a structured “language of requests and responses” that every participant understands.
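To show what those “requests and responses” look like on the wire, here is a minimal Python sketch that builds the JSON-RPC 2.0 messages for the report-to-Slack flow. The `tools/call` method and the `name`/`arguments` and `content`/`isError` fields follow the MCP specification; the tool names (`read_file`, `send_message`), their arguments, and the `summarize` placeholder standing in for the model are assumptions for illustration.

```python
import json
from itertools import count

_next_id = count(1)  # JSON-RPC request ids only need to be unique within a connection


def request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    return {"jsonrpc": "2.0", "id": next(_next_id), "method": method, "params": params}


def success(req: dict, result: dict) -> dict:
    """Build a JSON-RPC 2.0 success response that echoes the request id."""
    return {"jsonrpc": "2.0", "id": req["id"], "result": result}


def text_result(text: str) -> dict:
    """MCP-style tool result carrying a single block of text."""
    return {"content": [{"type": "text", "text": text}], "isError": False}


def summarize(text: str) -> str:
    """Placeholder for the summarization the host's model would perform."""
    return "Summary: " + text[:40]


def flow() -> list:
    """Return the wire messages for: read report -> summarize -> post to Slack."""
    # 1. File system client -> file system server (hypothetical tool name).
    read_req = request("tools/call", {"name": "read_file", "arguments": {"path": "project/latest_report.md"}})
    read_resp = success(read_req, text_result("(full report text)"))
    # 2. Inside the host, the model turns the tool output into a summary.
    summary = summarize(read_resp["result"]["content"][0]["text"])
    # 3. Slack client -> Slack server (hypothetical tool name and arguments).
    post_req = request("tools/call", {"name": "send_message", "arguments": {"channel": "#team", "text": summary}})
    post_resp = success(post_req, text_result("message posted"))
    return [json.dumps(m) for m in (read_req, read_resp, post_req, post_resp)]


if __name__ == "__main__":
    for line in flow():
        print(line)
```

The shared `id` is what lets each client match a response to the request it sent, even when several requests are in flight at once.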

The server primarily provides three capabilities: Resources, which are data for the AI to read, like a file or a document; Tools, which are actions for the AI to execute, like running a command or calling an API; and Prompts, which are reusable instruction templates to simplify common workflows.
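A minimal server-side sketch can make the three capability types concrete. The method names `resources/read`, `tools/call`, and `prompts/get`, and the general shape of their results, follow the MCP specification; the in-memory resource, the `add` tool, and the `summarize` prompt are hypothetical. A real server would also implement the list methods and the initialization handshake, and would return JSON-RPC errors for unknown names instead of raising `KeyError`.

```python
import json
from typing import Any, Dict

# Hypothetical in-memory data; a real server would read files, call APIs, etc.
RESOURCES = {"file:///notes/design.md": "Constraint: the API must stay backward compatible."}
PROMPTS = {"summarize": "Summarize the following text in three bullet points:\n{text}"}


def add(a: int, b: int) -> int:
    """Example tool: an action the AI can execute."""
    return a + b


TOOLS = {"add": add}


def handle(message: str) -> str:
    """Dispatch one JSON-RPC 2.0 request to a resource, tool, or prompt handler."""
    req = json.loads(message)
    method, params = req["method"], req.get("params", {})
    result: Dict[str, Any]
    if method == "resources/read":  # Resources: data for the AI to read
        uri = params["uri"]
        result = {"contents": [{"uri": uri, "mimeType": "text/markdown", "text": RESOURCES[uri]}]}
    elif method == "tools/call":  # Tools: actions for the AI to execute
        value = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    elif method == "prompts/get":  # Prompts: reusable instruction templates
        text = PROMPTS[params["name"]].format(**params.get("arguments", {}))
        result = {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}
    else:
        error = {"code": -32601, "message": "Method not found"}  # standard JSON-RPC error code
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


if __name__ == "__main__":
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call", "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
    print(handle(json.dumps(call)))
```

Keeping all three capability types behind one message format is what allows any MCP client to talk to this server without custom glue code.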

Picture this “Host-Client-Server” architecture as a high-security building. The Host (your AI assistant) is the building’s central commander. Each Server (a tool like GitHub or Google Drive) is an individual, secure room within the building, holding valuable assets. So why have an intermediate Client layer? If a single master key opened every room, one compromised key would put the entire building at risk. The designers of MCP anticipated this, so the commander does not open the doors directly. Instead, the commander hires a dedicated, independent security guard (Client) for each room, and each guard holds only the key to the one room they are responsible for.

The brilliance of this design lies in security isolation:

- Least Privilege: The GitHub guard can only enter the GitHub room and has absolutely no way of opening the Google Drive door.

- Risk Containment: Even if the Google Drive guard is tricked by a malicious actor (e.g., the server has a vulnerability or is attacked), the damage is largely confined to the Google Drive room. The adjacent GitHub room stays out of reach, helping keep other critical operations secure.
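The “one guard, one key” idea can be sketched in Python as clients that each hold a reference to exactly one server and contain that server’s failures. This is a hypothetical, in-process illustration (`IsolatedClient`, `github_server`, `drive_server`, and `fan_out` are made-up names); it models only the one-to-one wiring and error containment, not a real security boundary.

```python
from typing import Callable, Dict


class IsolatedClient:
    """Hypothetical client bound to exactly one server; it holds no handle to any other."""

    def __init__(self, name: str, server: Callable[[str], str]) -> None:
        self.name = name
        self._server = server

    def request(self, payload: str) -> dict:
        try:
            return {"ok": True, "result": self._server(payload)}
        except Exception as exc:  # a fault in this server is reported only in this client's result
            return {"ok": False, "error": f"{self.name}: {exc}"}


def github_server(payload: str) -> str:
    return f"github handled {payload!r}"


def drive_server(payload: str) -> str:
    # Simulates a compromised or buggy server.
    raise RuntimeError("simulated vulnerability triggered")


def fan_out(clients: Dict[str, IsolatedClient], payload: str) -> Dict[str, dict]:
    """Send a request through every client; one server failing does not stop the others."""
    return {name: client.request(payload) for name, client in clients.items()}


if __name__ == "__main__":
    clients = {
        "github": IsolatedClient("github", github_server),
        "drive": IsolatedClient("drive", drive_server),
    }
    for name, outcome in fan_out(clients, "list recent items").items():
        print(name, outcome)
```

In real deployments the stronger boundary comes from transport and process separation (for example, with the stdio transport each server runs as its own subprocess launched by the client), combined with the host’s permission checks. Isolation of this kind does not stop a malicious server from returning text crafted to manipulate the model, which is one reason hosts still ask for consent before actions.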

This matters to any organization. Put simply, it’s like dividing your workspace into independent “fireproof compartments.” If one compartment (like a newly tested tool) has a problem, the trouble is contained there and is far less likely to spread to the compartments holding your core projects (like GitHub). That makes it much safer to connect and experiment with new tools without putting your most critical workflows at risk.

The Path Forward: Unlocking Your AI

The era of AI being trapped on digital islands is ending. As an open, unified standard, the Model Context Protocol (MCP) is building a solid bridge between AI and the real world. What it unlocks is a new future composed of countless tools and capabilities that can be freely combined and orchestrated by intelligent agents. The question is no longer if AI will deeply integrate with our tools, but how—and MCP provides the answer. It is time to join this thriving community and connect your AI to this world of infinite possibilities.

This bridge to the real world has been built, but the journey is just beginning. In the upcoming Part II of this series, we will cross this bridge to dive deep into several real-world MCP use cases, exploring how AI will concretely revolutionize the way we work once it truly breaks free from its contextual chains. Stay tuned.

In the meantime, you can start with a powerful MCP server today: iWeaver.

Our iWeaver service can be integrated as an MCP server, allowing you to bring its powerful agent capabilities into hosts like Dify, Cursor, and more. This means you can enhance your existing workflows with iWeaver’s unique intelligence, finally transforming your AI assistant from a “genius in a locked room” into a true “digital partner.”

Visit iWeaver.ai to get your MCP endpoint and start building a more powerful AI assistant today.