On February 5, the AI industry witnessed a historic “collision” as Anthropic and OpenAI launched their flagship models, Claude Opus 4.6 and GPT-5.3 Codex, back-to-back.

With two high-profile releases landing at once, picking a winner means looking past the hype and focusing on objective technical dimensions. I generally break my analysis into three layers: core technical updates, what the benchmarks reveal about capabilities, and how delivery differs in real-world scenarios. Below, I use this framework to deconstruct the technical features and empirical performance of the two models.

Analyzing the Breakthroughs in Claude Opus 4.6

Based on my previous research and the latest technical documentation, the evolution of Claude Opus 4.6 centers on four headline updates:

- Adaptive Thinking: This feature allows the model to dynamically allocate computational resources based on task difficulty. In my testing, I found the model responds almost instantaneously to simple queries, while it enters a “deep reasoning” mode for complex architectural designs, taking more time to ensure logical rigor.

- 1-Million Token Context & Compaction API: While the 1-million-token window is massive, the real innovation is the Compaction API. To combat the performance degradation typical of long conversations, this API intelligently compresses historical dialogue by retaining only critical logical nodes. This significantly reduces inference costs for long-term projects.

- Data Residency Controls: This version allows enterprise users to restrict inference to U.S.-based servers. I view this as a strategic move to address the strict compliance requirements of regulated industries like finance and healthcare.

- 128K Output Length: The maximum single-turn output has been expanded to 128,000 tokens, enabling the model to generate very large code blocks or entire technical documents in one go without losing coherence.
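
To make the compaction idea above concrete, here is a minimal sketch of the general technique: once a conversation exceeds a token budget, older turns are folded into a short summary while the most recent turns are kept verbatim. This is not Anthropic's Compaction API. The names `Turn`, `estimate_tokens`, `summarize`, and `compact` are hypothetical, the 4-characters-per-token estimate is a rough assumption, and the summarizer is a placeholder for what would normally be a model call.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str  # "user", "assistant", or "system"
    text: str


def estimate_tokens(text: str) -> int:
    # Rough assumption: about 4 characters per token.
    return max(1, len(text) // 4)


def summarize(turns: list[Turn]) -> Turn:
    # Placeholder summarizer: keeps only the first sentence of each turn.
    # A real system would ask a model to write this summary.
    points = [t.text.split(".")[0].strip() for t in turns]
    return Turn("system", "Summary of earlier turns: " + "; ".join(points))


def compact(history: list[Turn], budget: int, keep_recent: int = 2) -> list[Turn]:
    """Return history unchanged if it fits the budget, else summarize older turns."""
    total = sum(estimate_tokens(t.text) for t in history)
    if total <= budget or len(history) <= keep_recent:
        return history
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    return [summarize(older)] + recent


if __name__ == "__main__":
    history = [
        Turn("user", "We chose PostgreSQL. It fits our workload."),
        Turn("assistant", "Schema drafted. Three tables so far."),
        Turn("user", "Add an audit log table."),
        Turn("assistant", "Audit log added with a timestamp index."),
    ]
    for turn in compact(history, budget=10):
        print(f"{turn.role}: {turn.text}")
```

The cost saving comes from the fact that every later request carries the short summary instead of the full early transcript. The trade-off is that anything the summary omits is no longer visible to the model.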

Decoding the Agentic Strengths of GPT-5.3-Codex

OpenAI’s GPT-5.3-Codex leans heavily into execution speed and system-level interaction. According to the official specifications, the primary highlights include:

- Increased Inference Efficiency: The model operates 25% faster than its predecessor, GPT-5.2 Codex. In my comparative tests, GPT-5.3 Codex demonstrated significantly higher throughput for identical script-generation tasks.

- Mid-turn Steering: This allows users to issue new instructions while the model is executing a long-running task. For instance, if the model is running an automated script in the terminal, I can intervene and correct its path in real-time without restarting the process.

- System-Level Operational Capability: Positioned as an “agentic programming model,” it goes beyond writing code. It has been optimized to use tools at the OS level, manage deployments, and monitor testing environments autonomously.

- Self-Assisted Development: OpenAI revealed that GPT-5.3 Codex was used during its own training and debugging phases. This indicates the model has reached a level of engineering maturity where it can assist in its own iteration.

Comparative Benchmarks: Claude Opus 4.6 vs. GPT-5.3-Codex

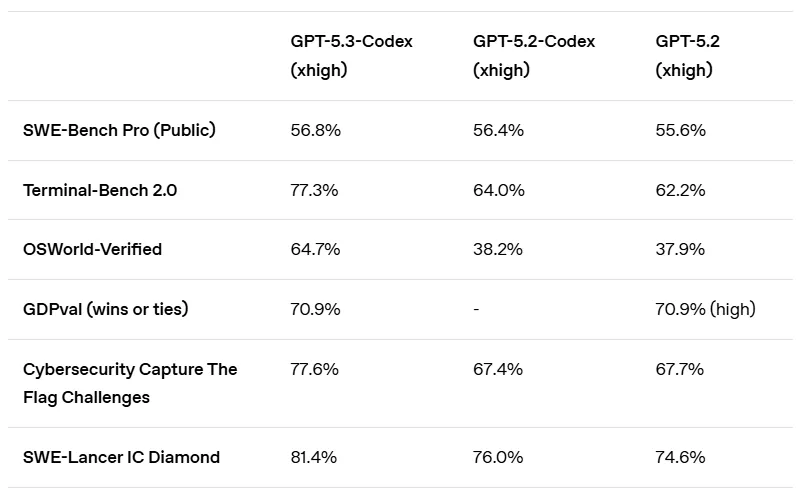

To objectively measure performance, I have selected several industry-standard benchmarks. Here is a brief explanation of what these metrics represent:

- Terminal-Bench 2.0: Evaluates the AI’s ability to execute complex commands and manage tasks within a CLI (Command Line Interface).

- SWE-bench Pro: Measures the success rate of the AI in resolving real-world software engineering issues, such as actual bug fixes on GitHub.

- GDPval-AA: Assesses the model’s proficiency in high-value professional knowledge work, such as financial analysis and legal research.

- OSWorld: Tests the AI’s ability to navigate a GUI (Graphical User Interface) to complete daily office tasks.

- Humanity’s Last Exam: A high-difficulty, multi-disciplinary reasoning test designed to push the boundaries of expert-level knowledge.

| Metric | Claude Opus 4.6 | GPT-5.3 Codex | Winner |
| --- | --- | --- | --- |
| Terminal-Bench 2.0 | 65.4% | 77.3% | GPT-5.3 Codex |
| SWE-bench Pro | Not Disclosed | 57.0% | GPT-5.3 Codex |
| OSWorld | 46.2% | 64.7% | GPT-5.3 Codex |
| GDPval-AA (Elo) | +144 vs Baseline | Baseline | Claude Opus 4.6 |
| Humanity’s Last Exam | Top Score | Not Disclosed | Claude Opus 4.6 |
| Context Window | 1,000,000 Tokens | ~200,000 Tokens | Claude Opus 4.6 |
| Speed Improvement | Baseline | 25% faster (vs. GPT-5.2 Codex) | GPT-5.3 Codex |
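
To put the GDPval-AA gap in more intuitive terms, an Elo difference can be converted into an expected head-to-head win rate. The sketch below assumes GDPval-AA ratings follow the standard Elo logistic formula on a 400-point scale, which the table does not confirm. The function name `elo_expected_score` is my own.

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected score of the higher-rated side under the standard Elo formula."""
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


if __name__ == "__main__":
    gap = 144  # Elo gap reported in the table above
    print(f"{elo_expected_score(gap):.1%}")  # prints 69.6%
```

Under that assumption, a +144 gap means the higher-rated model would be preferred in roughly 7 out of 10 pairwise comparisons. That is a clear lead, but not a sweep.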

Real-World Scenario Analysis: Which Model to Choose?

Based on the technical parameters and data above, I recommend the following for different professional needs:

Choose Claude Opus 4.6 if:

- You are a Software Architect: It is the superior choice for refactoring legacy projects involving hundreds of thousands of lines of code.

- You work in High-Compliance Fields: It performs better in finance or law where logical precision and regulatory adherence are non-negotiable.

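- You have zero tolerance for “Hallucinations” over long inputs: In the latest “Needle In A Haystack” tests, its long-context recall reached 76%, far outpacing competitors. Note that this test measures retrieval from a long context, not factual accuracy in general.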

Choose GPT-5.3 Codex if:

- You are a Full-Stack Developer: It is optimized for sheer development speed and tasks requiring frequent interaction with terminals, databases, and cloud platforms.

- You prefer “Human-in-the-Loop” Coding: The mid-turn steering is perfect for developers who want to adjust the AI’s logic flow through continuous dialogue.

- You specialize in Cybersecurity: As the first model classified with “High-Level Cybersecurity Capability,” it holds a decisive edge in vulnerability detection and defense.

My conclusion regarding this simultaneous release is that both companies have pivoted toward “long-task execution” and “agentic engineering,” albeit with different focuses. Claude Opus 4.6 excels in ultra-long context, session management (Compaction), and enterprise compliance. Conversely, GPT-5.3-Codex dominates in software engineering benchmarks, execution speed, and long-term tool utilization.

For team-level selection, I suggest a simple rule: run an A/B test using your actual internal repositories. Track the success rate, number of revisions, cost, and time-to-delivery rather than relying solely on third-party benchmarks.
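
As a starting point for such an A/B test, the sketch below aggregates the four metrics named above per model. The record type `TaskRun`, the function `summarize_runs`, and the sample values are all hypothetical placeholders rather than measured results. In practice each record would come from running the same internal task against each model.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRun:
    model: str
    succeeded: bool   # did the change pass review and tests?
    revisions: int    # follow-up prompts needed before acceptance
    cost_usd: float   # API or subscription cost attributed to the task
    minutes: float    # wall-clock time to delivery


def summarize_runs(runs: list[TaskRun]) -> dict[str, dict[str, float]]:
    """Group runs by model and average each tracked metric."""
    by_model: dict[str, list[TaskRun]] = {}
    for run in runs:
        by_model.setdefault(run.model, []).append(run)
    report: dict[str, dict[str, float]] = {}
    for model, group in by_model.items():
        report[model] = {
            "success_rate": mean(1.0 if r.succeeded else 0.0 for r in group),
            "avg_revisions": mean(r.revisions for r in group),
            "avg_cost_usd": mean(r.cost_usd for r in group),
            "avg_minutes": mean(r.minutes for r in group),
        }
    return report


if __name__ == "__main__":
    # Placeholder data for illustration only, not real measurements.
    runs = [
        TaskRun("model-a", True, 1, 0.40, 12.0),
        TaskRun("model-a", False, 3, 0.90, 30.0),
        TaskRun("model-b", True, 2, 0.30, 9.0),
        TaskRun("model-b", True, 0, 0.20, 7.0),
    ]
    for model, stats in summarize_runs(runs).items():
        print(model, stats)
```

Running both models on the same set of tasks keeps the comparison fair. A few dozen tasks per model is a more trustworthy basis than a handful.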

For individual users, subscribing to both can be prohibitively expensive. In this case, I recommend using an aggregator like iWeaver. It gives you access to both models under a single subscription, so you can switch between Claude and GPT instantly until you find the right fit for your specific task.